This opinion piece is written by Patrick Grady from Metaverse EU. It expresses his opinion only and does not reflect the views of Metaverse EU.

Politicians, researchers, and developers trying to understand immersive technology have inadvertently endorsed the most compelling fantasy of all: the concept of “mixed reality”.

Since around 2017, when mainstream virtual reality headsets started to don cameras, mixed reality has become a popular way to describe experiences that appear to combine physical and digital elements. But introducing another vague term to a field that already suffers from ambiguity will only confuse those desperately trying to make sense of it.

Few concepts are easily defined, and that’s not always a bad thing. Slippery concepts like the “metaverse”—a suitcase word containing different meanings—can describe trends, principles, or ideas that change over time. When policymakers start to pay attention, however, semantics matter. How a concept is understood and defined determines how legislation is constructed, applied and implemented, where attention concentrates, and how funding is allocated.

It is concerning, then, that organisations such as the OECD, the World Economic Forum, the European Parliament, and the Centre on Regulation in Europe (an influential Brussels-based think tank) are adopting the term “mixed reality”.

They should abandon it.

Milgram’s Continuum

Virtual reality describes experiences that digitally simulate physical ones. Users of a virtual reality headset, for example, see a virtual environment in place of the physical environment. In virtual reality, virtual objects do not physically exist where they seem to—a kind of illusion.

Augmented reality describes the experience achieved when computers add digital elements that appear atop, and so augment, the physical world. Virtual objects, such as captions on smart glasses, appear to co-exist in physical space. Unlike virtual reality, these virtual objects depend on the surrounding space and light.

Mixed reality has come to describe experiences that do not neatly fit into these two buckets. Although this umbrella term sought to anticipate a menu of new devices that blend the physical and digital worlds, now it only obfuscates essential differences between them.

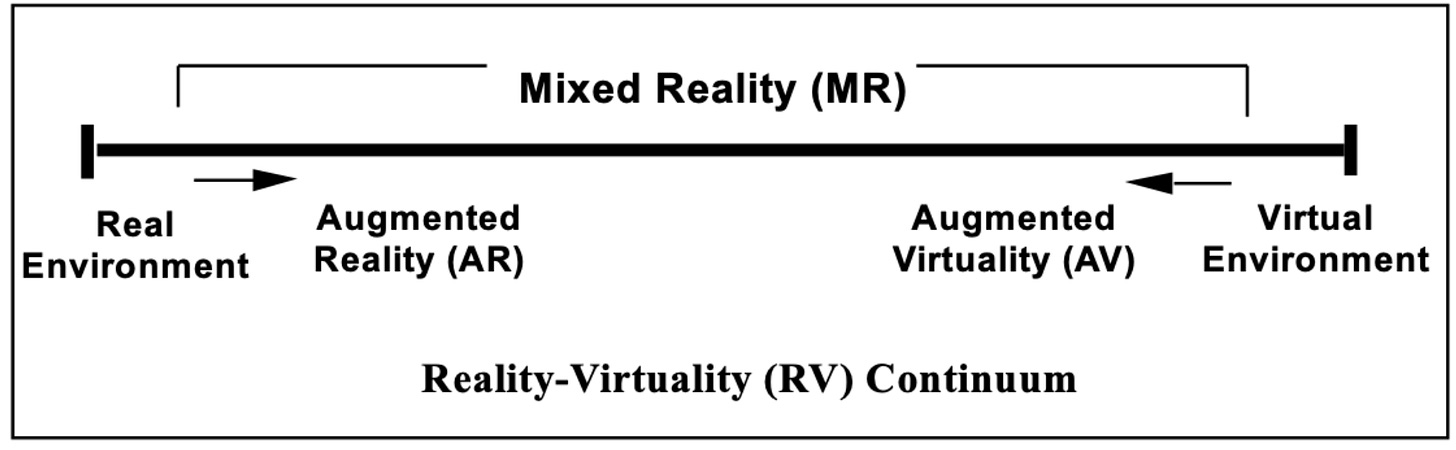

Paul Milgram, a Professor at the University of Toronto, coined the term “mixed reality” in the 1990s, and many who use it today cite the Reality-Virtuality Continuum he presented:

“Physical” has since replaced “real” because virtual experiences are also real, a point philosopher David Chalmers made persuasively.

Milgram’s spectrum includes augmented reality and “augmented virtuality” displays. Unfortunately, the former is too broad and the latter is now unnecessary.

Augmented reality and passthrough displays

For Milgram, augmented reality describes both videothrough displays, which present a live feed of the physical world, and seethrough (transparent) displays, on which digital objects are presented.

Including videothrough—now called passthrough—displays in the definition of augmented reality was a mistake: videothrough displays do not augment the physical world but reproduce and stream it, a significant difference.

To see why, consider BMW’s heads-up display. The display projects a virtual navigation system on a car's glass (seethrough) windshield. The display does not reproduce the view ahead, and the driver’s view of the physical environment ahead (e.g., the road and vehicles) does not depend on the display.

Consider instead that the driver is wearing a passthrough headset with external cameras that achieve roughly the same experience: the driver can see both a virtual navigation system and the road ahead (albeit a digital, live-streamed version). The quality of passthrough displays (the number of pixels, depth and colour) has become so compelling that the illusion that the driver is seeing the physical road ahead would be strong.

Yet there are important differences between the two displays that ought to justify different policy considerations.

With the passthrough device, the driver’s representation of the road is mediated by bits and can be manipulated or hacked. The driver may suffer lag, distortion, and motion sickness, creating unique safety hazards. If an opposing driver wears an alternative model, the two drivers may not have a shared experience of the road.

These elements matter for policymaking and apply differently to genuine (seethrough) augmented reality, where the physical environment is shared. Describing both experiences as mixed reality obfuscates these differences.

In the case of a malfunction or an empty battery, the driver wearing a passthrough headset will quickly appreciate the difference between passthrough and seethrough displays.

Passthrough displays are not augmented reality but a form of virtual reality, and augmented reality should not be included under the umbrella of mixed reality.

What about the remainder of the mixed reality continuum?

The redundancy of “augmented virtuality”

In 1993, software engineer P. J. Metzger wrote “Adding reality to the virtual”, in which he imagined a participant entering a pod wearing a camera-equipped helmet. Through the cameras, the user could see the physical environment around them and “reach out and turn on a switch, watching his real hand perform this task”.

To Milgram, this collusion between the physical environment, the physical hand and the virtual display created “a small terminological problem, since that which is being augmented is not some direct representation of a real scene… but rather a virtual world, one that is generated primarily by computer”.

In the driver example, Metzger’s headset would not represent the physical world through a simple livestream but instead present an entirely alternative and interactive landscape (BMW has also built just this use case).

So, Milgram coined augmented virtuality to describe “principally graphic” worlds that physical inputs can manipulate.

His paper, which even preceded interactive technologies like Nintendo Wii’s remote controllers (2006) or PlayStation’s EyeToy (2003), could not have imagined the leading virtual reality headsets of today, all of which use passthrough and feature inputs such as eye and hand tracking that let users ‘manipulate’ virtual scenes.

In other words, as virtual reality has evolved, the term augmented virtuality has become unnecessary. What Milgram describes as augmented virtuality (or videothrough) is not a separate category but state-of-the-art virtual reality: a change in degree, not in kind.

Moving on from “mixed reality”

As augmented and virtual reality technology evolves, new features (e.g., wirelessness, passthrough feeds, eye tracking, neural wristbands) will emerge and present novel policy challenges. Policymakers mustn’t lose sight of the essential differences between the two.

Agreeing on standard definitions is the basis of interoperability and harmonisation. Policymakers should therefore ditch the term mixed reality and focus instead on the unique and emerging features of augmented and virtual reality, along with their associated policy consequences, such as the privacy implications of virtual reality devices that use cameras to monitor users and the external environment.

Definitional questions remain. What about non-visual ‘virtual’ experiences (audio, haptics, etc.)? Is mobile augmented reality a form of non-immersive virtual reality? Is extended reality a more suitable umbrella term to capture immersive technology?

Policymakers should approach these questions soberly and resist the allure of trendy marketing language. Sloppy definitions, after all, will create sloppy and ineffectual policies.