Current Approaches to Moderation and Safety in the Metaverse

Ilker Bahar provides this analysis. Ilker is a PhD candidate in Media and Cultural Studies at the University of Amsterdam. He is currently conducting digital ethnography on the VRChat platform, immersing himself in different virtual worlds using a Head-Mounted Display (HMD).

By observing and participating in the daily activities of users, Ilker is examining how VR and immersive technologies are transforming identity, intimacy, and sexuality. His research interests include gender and sexuality, mediated forms of intimacy, internet cultures, inclusion and diversity in online spaces, and digital ethics and governance.

The evolution of virtual reality (VR) is significantly transforming how users experience intimacy and social relationships.

Social VR platforms such as VRChat (2014), RecRoom (2016) and Meta Horizon Worlds (2019) provide immersive environments where users present themselves with avatars to play games, chat, watch movies, and interact with people from across different countries. These environments offer unique ways to build and maintain relationships—such as attending a virtual concert on a first date, meditating with a friend in a serene landscape, or exploring ancient Athens with classmates.

Examining user behaviour in VR environments reveals both the new challenges and the opportunities that come with this emerging technology. Previous research has shown that these immersive experiences can significantly foster empathy, reduce social stigma, and increase inclusivity (Slater et al., 2015; Bailenson et al., 2018).

Yet the advent of social VR platforms also presents significant issues such as trolling, stalking, sexual abuse, and hate speech (see: Independent, 2022; Bloomberg, 2024). The immersive nature of these platforms, coupled with the blurred boundaries between virtual and real-world identities, can amplify emotional and psychological responses to these harmful occurrences. Traditional forms of moderation become increasingly inadequate, and because these platforms are predominantly frequented by younger users, these concerns become even more critical.

Despite the rapid expansion of these virtual environments, policy and regulation have not kept pace. At the EU level, the adoption of the Digital Services Act (see: Metaverse EU article), the communication on Web 4.0 and Virtual Worlds (see: Metaverse EU article), and the Citizens’ Panel Report represent critical steps towards addressing these unique challenges. However, there remains a lack of comprehensive research and insight into the safety and moderation of these spaces.

Drawing on my ethnographic research in social VR platforms, in this article I briefly examine the current approaches to moderation and safety employed by RecRoom and VRChat—two of the most widely used social VR platforms with ca. 3 million and 9 million estimated total users respectively (MMO Stats).

Focusing on key areas such as platform governance, age verification, community moderation, and personal safety features, I aim to guide developers and policymakers in adopting effective strategies to ensure that VR environments are safe and inclusive.

The Cases of RecRoom and VRChat

1) Platform Governance

Platforms hosting multiple users will inevitably have to deal with users misbehaving or committing crimes online, so they must agree on guidelines, rules, and resources to curb harmful behaviour.

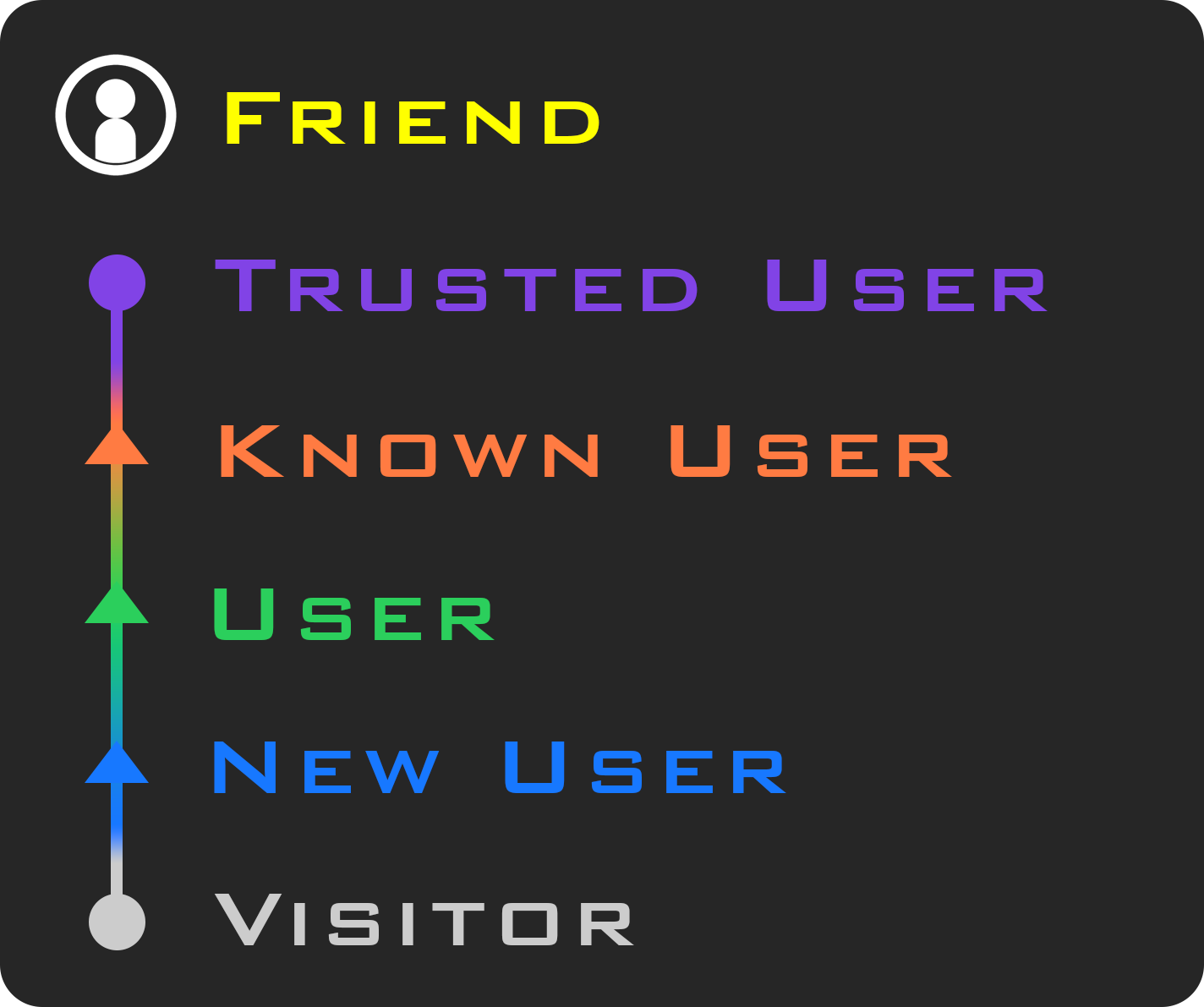

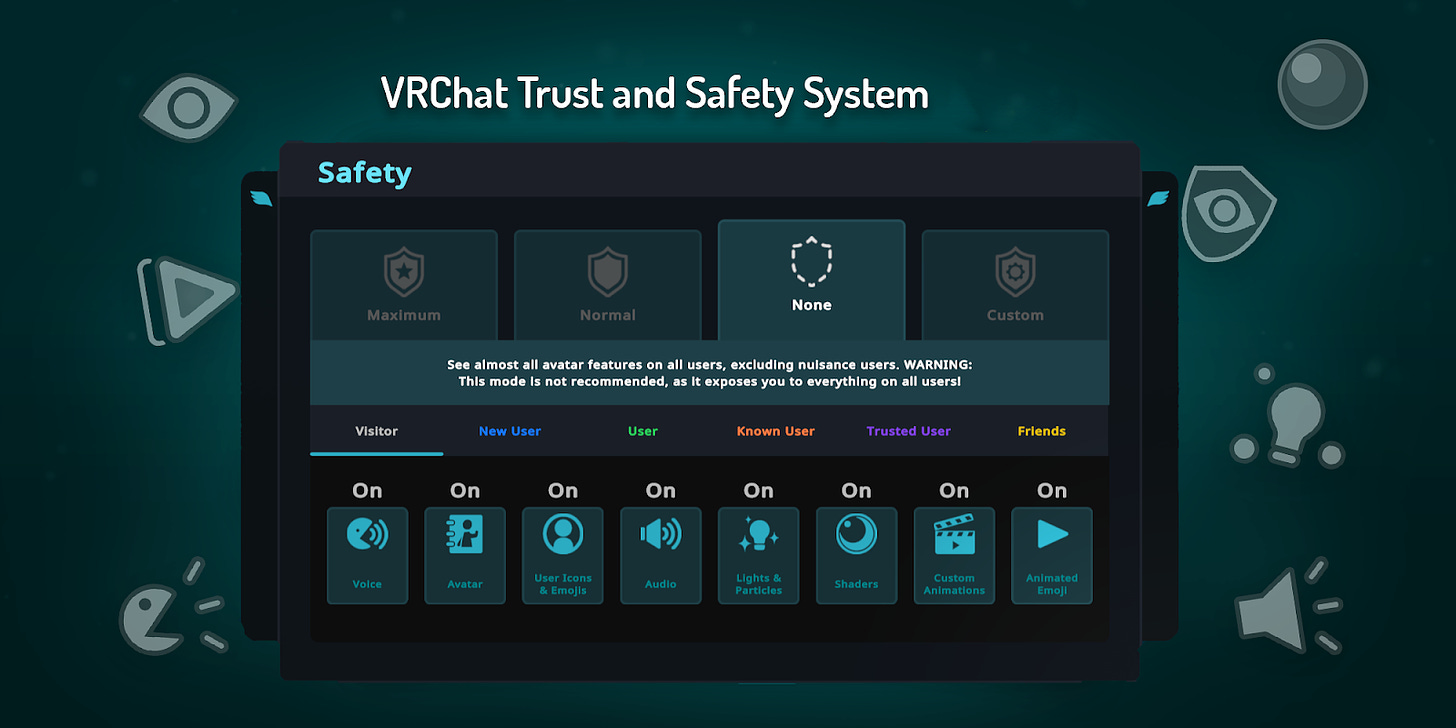

VRChat: Employs a bottom-up approach to moderation, encouraging community moderation and individual reports. Recently, the platform introduced a Content Gating System that requires creators to label worlds and avatars containing adult content, graphic violence, gore or horror elements, making these inaccessible to users under 18 based on their registered birthdate. The platform also benefits from a Trust and Safety System that ranks users based on their adherence to community guidelines and their experience on the platform. Higher trust levels (such as "Known User" or "Trusted User") unlock additional features such as uploading content to the platform, which motivates users to avoid inappropriate behaviour and engage positively with others.

There is also a special rank called "Nuisance" assigned to users who have been repeatedly muted, blocked, and reported by others for inappropriate behaviour. These users have a visible indicator above their nameplate, and their avatars are fully invisible and muted, preventing them from disrupting others.
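The rank-based logic described above can be illustrated with a minimal sketch. It uses only the rank names mentioned in this article; the ordering of ranks, the `UPLOAD_MIN_RANK` threshold, and the function names are hypothetical and do not describe VRChat's actual implementation.

```python
from enum import IntEnum


class TrustRank(IntEnum):
    # Illustrative ordering only; the real system has more ranks and
    # its internal scoring is not public.
    NUISANCE = 0
    VISITOR = 1
    KNOWN_USER = 2
    TRUSTED_USER = 3


# Hypothetical threshold: the lowest rank allowed to upload content.
UPLOAD_MIN_RANK = TrustRank.KNOWN_USER


def can_upload(rank: TrustRank) -> bool:
    """Return True if a user of this rank may upload worlds or avatars."""
    return rank >= UPLOAD_MIN_RANK


def render_settings(rank: TrustRank) -> dict:
    """Decide how other users see and hear a user of the given rank."""
    hidden = rank == TrustRank.NUISANCE  # Nuisance users are hidden and muted
    return {"avatar_visible": not hidden, "voice_audible": not hidden}
```

The point of the sketch is the incentive structure: privileges grow with rank, while the lowest rank removes a user's ability to disrupt others.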

RecRoom: Implements a combination of automated and human moderation. The platform uses AI-based voice moderation (ToxMod) to detect and filter inappropriate language (available in 18 languages), and its automated systems scan text and images for violations of community guidelines. Human moderators and volunteers complement these efforts by responding to user reports and enforcing community standards. Their role is crucial in addressing non-verbal forms of inappropriate behaviour, such as non-consensual groping of a person’s avatar or performing a Nazi salute, which might easily escape detection by automated systems.

Recently, a community backlash erupted over the platform's automated voice-moderation system, with users criticising it for false detections and infringing on freedom of expression even when they utter “moderate” slang words. In response, platform officials issued a statement defending the system's accuracy. They emphasised that actions are only taken after multiple detections to minimise false positives. The announcement also highlighted that the introduction of voice moderation has led to a 70% reduction in toxic voice chat over the past year.
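The idea of acting only after multiple detections can be sketched as a simple sliding-window counter. The threshold, the time window, and the class name below are hypothetical; RecRoom has not published the parameters its system uses.

```python
from collections import defaultdict, deque


class DetectionTracker:
    """Flag a user only after several detections within a time window."""

    def __init__(self, threshold: int = 3, window_seconds: float = 600.0):
        # Both values are illustrative assumptions, not platform settings.
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)

    def record(self, user_id: str, timestamp: float) -> bool:
        """Store one detection; return True if action should be taken."""
        events = self._events[user_id]
        events.append(timestamp)
        # Drop detections that fall outside the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.threshold
```

A single false detection never triggers a sanction under this design, which is the trade-off the platform's statement points to: fewer false positives in exchange for slower reaction to genuine abuse.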

Moderation & safety challenges: The use of AI-driven automated moderation systems raises important questions about their effectiveness and accuracy. Like other algorithmic systems, they may be prone to discriminatory decisions, such as removing content or banning users in racially or ethnically biased ways. Automated moderation systems can also struggle to detect more subtle forms of inappropriate behaviour that human moderators might easily catch.

For example, a recent Bloomberg Businessweek report highlighted how child predators in Roblox circumvented automatic chat moderation by using coded language, such as referring to Snapchat with a ghost emoji or using "Cord" instead of "Discord" when inviting minors to these platforms. This demonstrates the limitations of AI-driven moderation in recognising and addressing nuanced or disguised forms of harmful behaviour.
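A deliberately simple sketch shows why coded language defeats keyword matching. The blocklist and the function below are hypothetical and far cruder than any production system; they only illustrate the failure mode described in the report.

```python
# Hypothetical blocklist of off-platform app names.
BLOCKED_TERMS = {"discord", "snapchat"}


def naive_filter(message: str) -> bool:
    """Return True if the message contains an exact blocklisted word."""
    words = message.lower().split()
    # Strip common trailing punctuation before comparing each word.
    return any(word.strip(".,!?") in BLOCKED_TERMS for word in words)


flagged = naive_filter("add me on Discord")   # exact term is caught
missed_abbrev = naive_filter("add me on Cord")  # shortened name slips through
missed_emoji = naive_filter("add me on 👻")     # emoji substitute slips through
```

Adding "cord" or the ghost emoji to the blocklist would catch these two cases but also flag innocent uses, and users can simply move on to a new code word.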

2) Age Verification

Given the presence of adult content on these platforms, it is crucial to implement robust age verification mechanisms to protect younger users from exposure to harmful content.

VRChat: Allows users aged 13 and above, encouraging parental monitoring for those under 18. It implements age gates for certain content, requiring users to confirm they are 18 or older to access mature worlds. Despite these measures, the system relies on self-verification, which is insufficient to prevent minors from accessing harmful content. In July 2024, the platform developers announced plans to partner with a third-party system for age verification to enhance the safety of minors.

Currently, some worlds such as bars and clubs display disclaimers about adult content or employ their own age verification methods, e.g., bouncers asking for birth dates. This example illustrates how the 3D and immersive nature of VR interactions allows users to engage in moderation practices akin to real-world settings, through a combination of visual and auditory cues (e.g., bodily gestures and voice). Yet the effectiveness of these measures in preventing minors from accessing these spaces is still questionable: a user can, for example, change their voice with the app VoiceMod to sound older and falsely declare their date of birth.

RecRoom: The platform offers a Junior Mode for users under 13, encouraging them to add their parent's or guardian’s email address. Junior Mode restricts communication with strangers via text or audio and limits access to certain content. However, many users under 13 still join the platform using non-junior accounts if they are not detected by moderators or reported by other users. The platform's age verification method, which requires a $1 payment through a third-party system called Stripe, is reportedly bypassed by juniors who simply create new accounts with incorrect birth dates.

Moderation & safety challenges: Third-party verification processes raise significant concerns with regard to data privacy and protection. For example, identifiable personal information might be leaked if users are required to scan their personal ID to verify their age.

3) Community Moderation

Social VR platforms also offer more autonomous and decentralised ways of moderating and governing one’s immediate social circle and interactions.

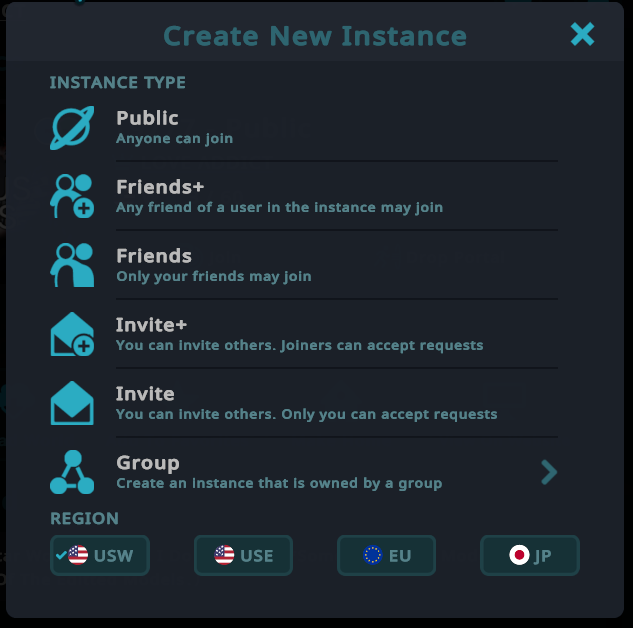

VRChat: The platform offers audience segregation tools through the “instance system”, enabling users to create parallel lobbies or rooms of a world based on the privacy they prefer and the users they want to interact with. For example, they can create a "Friends" instance of a world, allowing only their friends to join them while blocking others from teleporting to their location. They can also form groups and host events in “Group” instances, where they can manage and moderate content with the authority to warn, mute, kick, or ban unwanted users from the world. Compliance with the platform's code of conduct in private instances is relatively flexible, provided that all parties involved consent to the nature of the activities within the instance.

Audience segregation is crucial in maintaining a safe and enjoyable experience on the platform, as it allows users to control their social environments and limit exposure to unwanted interactions. Certain communities and individuals also engage in vigilante behaviour to maintain peace and safety on the platform, sometimes resulting in distinctive initiatives such as the famous Local Police Department (LPD). LPD is a community formed in 2018 whose members roleplay as police officers and loosely address issues such as harassment in public worlds, combining elements of entertainment with a bottom-up form of community moderation.

RecRoom: Similarly features audience segregation mechanisms through Public and Private Rooms. Users can create private rooms where only invited players are allowed to enter. Otherwise, joining a public room places the user in an instance of the room with other players already present. Users also have their personal “Dorm Room” where they can enjoy their privacy or invite their close friends to pass time together. Similar to the groups in VRChat, they can launch their own Clubs and moderate them according to their preferences. They can invite the members of the club to customised rooms (Clubhouse) and spend time with like-minded individuals in a well-curated and controlled social space.

Moderation & safety challenges: Audience segregation raises important questions about the extent of freedom allowed in private rooms. While these spaces can provide consenting adults with a private venue for sexual and romantic intimacy, the lack of effective age verification mechanisms means that users of all ages can access private instances on these platforms. This can result in minors entering spaces where harmful conduct may occur.

4) Personal Safety Measures

Platform users are provided with certain features to self-moderate their environment by controlling what they see and hear, and how they experience their presence in these 3D spaces.

VRChat: Offers tools for personal safety, including blocking and muting disruptive users to prevent unwanted interactions. The votekick feature enables players to collectively remove users causing trouble in a room. Users can also report inappropriate behaviour to the VRChat staff. The Trust and Safety System mentioned above allows users to customise settings for each trust rank, controlling aspects such as voice chat, avatar visibility, custom emojis, and sound effects to enhance protection against malicious content (e.g., muting all users with “Visitor” rank). By toggling Safe Mode in their launchpad, users can also disable all of these features for all surrounding users, adding an extra layer of security.

RecRoom: Includes personal safety measures such as a personal space bubble that makes others’ avatars invisible when they cross a set boundary, a stop hand gesture to instantly hide nearby avatars, and options to mute, block, or votekick disruptive users. Users can also report harassment or inappropriate behaviour to the platform staff.
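The personal space bubble can be understood as a distance check between avatars. The sketch below is an illustration under assumed names and an assumed radius of one metre; it does not reflect RecRoom's actual code.

```python
import math


def visible_avatars(own_position, others, bubble_radius=1.0):
    """Return the names of avatars that stay outside the personal bubble.

    own_position: (x, y, z) of the local user.
    others: mapping of avatar name to (x, y, z) position.
    bubble_radius: hypothetical boundary in metres; avatars closer than
    this are hidden from the local user's view.
    """
    return [
        name
        for name, position in others.items()
        if math.dist(own_position, position) >= bubble_radius
    ]


nearby = {"intruder": (0.5, 0.0, 0.0), "friend": (3.0, 0.0, 0.0)}
shown = visible_avatars((0.0, 0.0, 0.0), nearby)  # only "friend" remains visible
```

Because the check runs on the viewer's side, the protection works without any action from moderators or from the user who crosses the boundary.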

Moderation & safety challenges: As these platforms grow, individual reports become more difficult to process promptly.

Towards Safe and Inclusive Policies

As VR environments become more accessible and widespread with the advent of more affordable consumer headsets, the opportunities and challenges presented here will grow even more relevant. As this article has shown, social VR platforms implement various innovative measures to enhance safety and moderation for their users, trying to strike a balance between privacy and surveillance; however, much work remains to be done.

For example, platforms can deploy professional awareness teams in public worlds to support the community in cases of inappropriate behaviour and guide users in addressing their concerns. Educational workshops, videos, and more comprehensive and mandatory onboarding tutorials can inform users about the unique challenges and issues they might experience in virtual environments. As recommended in its Citizens’ Panel, the EU can introduce voluntary certificates for virtual world applications to inform users about their safety, reliability and security.

As the regulatory landscape evolves with new initiatives, ongoing collaboration between platform developers, researchers, policymakers, and users will be essential to ensure that VR is a safe and inclusive space for all.